Key Terms & Concepts

Photo by chuttersnap on Unsplash

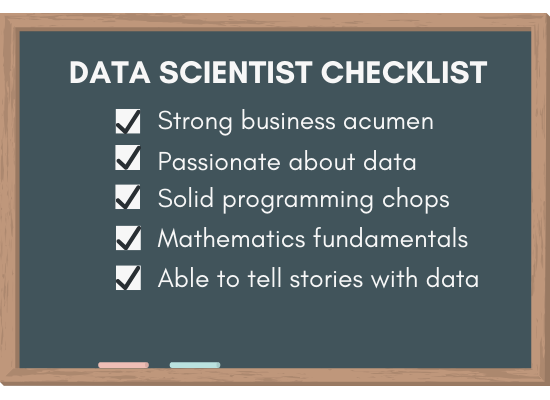

What does a Data Scientist do?

Being a Data Scientist is frequently regarded as "one of the sexiest careers of the 21st century", but I can assure you from first-hand experience that it's only mildly interesting. The term Data Science is a marketing phenomenon, and the Data Scientist job title has become an umbrella term for anyone who works with data. Nevertheless, I can't see myself working in any other role. I love the wide variety of problems I get to work on and being able to wear many different hats.

Data Science is a multidisciplinary field that combines statistics, computer science and business intelligence to extract meaningful information from data. If you're lucky, you will get to spend about 10% of your time working on flashy Machine Learning models. Understanding business problems, data wrangling and integrating your solution with existing business systems make up the other 90%.

The flashiest part of being a Data Scientist is building models: systems that learn by finding patterns in previous observations and applying them to new ones. These models quantify real-world phenomena and are what most people have in mind when they talk about artificial intelligence.

We frequently use the term "training" to refer to model development (i.e. training a model).

What exactly are Models?

Models are mathematical expressions that use a set of parameters to generate predictions for new observations. For instance, a salesperson can use their sales history to model the number of sales as a function of the number of leads.

If we let y be the number of sales and x be the number of leads, then we can construct a simple linear model to explain their relationship. Previous data might tell us:

$$ \hat{y} = 0.2 \times x$$

The ^ symbol (we call this a hat) denotes an estimate: this model helps us estimate sales from the number of leads. In this case, we have a single parameter, the coefficient 0.2. The values of a model's parameters are estimated during the training process.
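To make the training step concrete, here is a minimal sketch that estimates the coefficient of \(\hat{y} = \beta \times x\) by least squares through the origin, i.e. it picks the \(\beta\) that minimizes the squared error on past data. The lead and sales counts are hypothetical, chosen so the coefficient comes out at 0.2, and `fit_coefficient` is an illustrative helper rather than a library function.

```python
# Hypothetical sales history: number of leads (x) and resulting sales (y).
leads = [10, 20, 30, 40]
sales = [2, 4, 6, 8]


def fit_coefficient(xs, ys):
    """Least-squares estimate of beta for the no-intercept model y_hat = beta * x.

    Minimizing sum((y - beta * x)^2) gives beta = sum(x * y) / sum(x * x).
    """
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs)
    return numerator / denominator


beta = fit_coefficient(leads, sales)
print(f"estimated coefficient: {beta:.2f}")  # estimated coefficient: 0.20

# Use the trained parameter to estimate sales for a new observation (50 leads).
print(f"estimated sales for 50 leads: {beta * 50:.1f}")  # estimated sales for 50 leads: 10.0
```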

Training vs Testing

We should always reserve part of our data for testing. Never test your model on the same data used to develop it: the model has already seen those observations, so this form of data leakage hides overfitting and overestimates the model's performance on new observations.

Typically, we split the data into multiple sets: a training set for model development and a test set for model evaluation. The goal of the test set is to provide a realistic, unbiased estimate of how the model will perform on new observations. A common split is to allocate 80% of the data for training and 20% for testing.
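A minimal sketch of an 80/20 split using only Python's standard library: shuffle the row indices with a fixed seed so the split is reproducible, then slice. `split_train_test` and its `test_fraction` and `seed` parameters are illustrative; libraries such as scikit-learn provide their own `train_test_split`.

```python
import random


def split_train_test(rows, test_fraction=0.2, seed=42):
    """Shuffle rows and split them into (train, test) lists (hypothetical helper)."""
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)  # shuffle so the split isn't biased by row order
    n_test = round(len(rows) * test_fraction)
    test = [rows[i] for i in indices[:n_test]]
    train = [rows[i] for i in indices[n_test:]]
    return train, test


# Hypothetical dataset of (leads, sales) pairs.
data = [(x, 0.2 * x) for x in range(1, 101)]
train, test = split_train_test(data)
print(len(train), len(test))  # 80 20
```

Every row lands in exactly one of the two sets, so no test observation is ever used for training.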

Target and Explanatory Variables

Models are defined by their variables. The variables whose values are determined during the training process are known as parameters. Variables whose values are determined prior to the training process are known as hyperparameters. Hyperparameters can be tuned to improve model performance.
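To see the difference between parameters and hyperparameters, here is a sketch that trains the sales model by gradient descent instead of a closed-form formula (an illustrative substitution). The coefficient `beta` is a parameter learned from the data, while `learning_rate` and `n_epochs` are hyperparameters fixed before training starts. The data and hyperparameter values are hypothetical.

```python
def train_by_gradient_descent(xs, ys, learning_rate=0.0005, n_epochs=100):
    """Fit y_hat = beta * x by minimizing mean squared error with gradient descent.

    learning_rate and n_epochs are hyperparameters: they are fixed before training.
    beta is a parameter: its value is learned during training.
    """
    beta = 0.0  # initial guess for the parameter
    n = len(xs)
    for _ in range(n_epochs):
        # Gradient of MSE = (1/n) * sum((beta*x - y)^2) with respect to beta.
        gradient = (2 / n) * sum((beta * x - y) * x for x, y in zip(xs, ys))
        beta -= learning_rate * gradient
    return beta


leads = [10, 20, 30, 40]  # hypothetical data
sales = [2, 4, 6, 8]
beta = train_by_gradient_descent(leads, sales)
print(f"learned beta: {beta:.3f}")  # learned beta: 0.200
```

With `n_epochs=1` the same code stops at a coefficient of 0.15, which shows that hyperparameter choices affect the parameter values the training process ends up with.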

We pass explanatory variables to the model to generate a new prediction. The model outputs an estimate of the target variable based on these inputs.

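There are many ways of referring to these variables:

- Explanatory variables are also called independent variables, features, predictors or inputs.
- The target variable is also called the dependent variable, response, label or output.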

Supervised vs Unsupervised Learning

These two categories (plus semi-supervised learning) classify algorithms based on the data that is available to the model. In our sales example, the model ingests an independent variable (the number of leads) to output a response (the number of sales) that depends on this input.

When we have a clearly defined set of model inputs and a desired output, we can use a supervised learning algorithm to quantify their relationship. When we have clearly defined inputs but no clearly defined output, we rely on unsupervised learning algorithms to draw inferences from the independent variables alone. Clustering is a family of unsupervised learning techniques that group data points so that points within a group are similar to each other and dissimilar to points in other groups.
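As an illustration of clustering, here is a minimal sketch of k-means, one common clustering algorithm chosen here as an example. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its assigned points. For simplicity it initializes the centroids with the first k points; practical implementations usually use random or k-means++ initialization. The points are hypothetical.

```python
import math


def k_means(points, k, n_iterations=100):
    """Cluster 2-D points into k groups; returns (labels, centroids)."""
    centroids = [tuple(p) for p in points[:k]]  # simplified initialization
    labels = None
    for _ in range(n_iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centroids


points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
labels, centroids = k_means(points, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

No labels are supplied: the two groups emerge purely from the distances between the points.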

It is (almost) always more desirable to use supervised learning when we are able to do so. Oftentimes, we have to resort to unsupervised learning because the target is not properly labelled.

There's a third category known as semi-supervised learning, which addresses the scenario where only some of our observations are labelled. A classic semi-supervised approach, often called self-training, is to train a model on the labelled data, use this model to infer the missing labels, convert confident predictions into definite labels, retrain the model on the expanded set of labels and repeat until all data is labelled.
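The train, infer, label, retrain loop described above (often called self-training) can be sketched as follows. To keep it self-contained, the model is a deliberately simple 1-D nearest-class-mean classifier, and its confidence score (one minus the distance to the nearest class mean divided by the sum of distances to all class means) is an illustrative choice, as are the data and the 0.8 threshold. If no prediction clears the threshold in a round, the sketch accepts the single most confident one so the loop always terminates.

```python
def fit_class_means(xs, ys):
    """'Train' the model: compute the mean x value of each class label."""
    means = {}
    for label in set(ys):
        values = [x for x, y in zip(xs, ys) if y == label]
        means[label] = sum(values) / len(values)
    return means


def predict_with_confidence(means, x):
    """Predict the nearest class mean; confidence grows as x moves away from the others."""
    distances = {label: abs(x - m) for label, m in means.items()}
    label = min(distances, key=distances.get)
    total = sum(distances.values())
    confidence = 1.0 if total == 0 else 1 - distances[label] / total
    return label, confidence


def self_train(labelled_x, labelled_y, unlabelled_x, threshold=0.8):
    xs, ys = list(labelled_x), list(labelled_y)
    remaining = list(unlabelled_x)
    while remaining:
        means = fit_class_means(xs, ys)  # (re)train on the current labels
        predictions = [(x, *predict_with_confidence(means, x)) for x in remaining]
        confident = [(x, label) for x, label, conf in predictions if conf >= threshold]
        if not confident:
            # Fallback so the loop always makes progress: accept the most confident prediction.
            x, label, _ = max(predictions, key=lambda p: p[2])
            confident = [(x, label)]
        for x, label in confident:
            xs.append(x)  # convert the prediction into a definite label
            ys.append(label)
            remaining.remove(x)
    return xs, ys


xs, ys = self_train([1.0, 9.0], [0, 1], [1.5, 2.0, 8.0, 8.5, 5.2])
print(dict(zip(xs, ys)))  # {1.0: 0, 9.0: 1, 1.5: 0, 2.0: 0, 8.0: 1, 8.5: 1, 5.2: 1}
```

The ambiguous point 5.2 is only labelled in the second round, after the class means have been updated with the first batch of confident labels.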

Supervised learning: Regression vs Classification

Supervised learning problems can be further categorized as either regression or classification. Recall that a supervised learning problem has both a well-defined input and a well-defined output.

Regression explains/predicts the relationship between independent variables and a continuous dependent variable. We can use regression analysis to predict house prices, blood pressure or revenue.

Classification explains/predicts the relationship between independent variables and a categorical dependent variable. We can use a classifier to predict user sentiment and to classify objects into different groups.

Quantifying Model Performance

After we define the problem we want to solve, the next step is to build and compare several candidate models. We use performance metrics to quantify how well each model performs and to determine which one works best for our use case. One of the most straightforward performance metrics is accuracy, i.e. the percentage of correct predictions.

Is accuracy always a good indicator of model performance?

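Not always. This can be illustrated with two examples. Jim builds a regression model to predict house prices; his model never predicts the exact price of a house, so its accuracy is 0%. Mary builds a classifier to detect fraudulent purchases; because the vast majority of purchases are legitimate, her model achieves high accuracy even though it fails to flag any of the fraudulent ones.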

If we take accuracy at face value, we might be inclined to say Jim's model is performing poorly and Mary's model is performing well. A closer analysis reveals the opposite result.

Even though Jim's model never predicts the exact house price, it is useful because its errors are so small. Mary's model may be accurate, but it tends to classify fraudulent purchases as legitimate.

The moral of these anecdotes is that accuracy is a poor indicator for regression analysis and imbalanced datasets.
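A small sketch of both failure modes, using hypothetical data rather than Jim's or Mary's actual results: a regression model that is always off by a few hundred dollars, and a fraud classifier that labels every purchase as legitimate on a dataset where 5 of 100 purchases are fraudulent. `accuracy` is an illustrative helper that counts exact matches.

```python
def accuracy(actual, predicted):
    """Fraction of predictions that exactly match the actual values."""
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


# Regression: hypothetical house prices, predictions off by a few hundred dollars.
actual_prices = [310_400, 275_900, 420_150]
predicted_prices = [310_000, 276_000, 420_000]
print(accuracy(actual_prices, predicted_prices))  # 0.0

# Imbalanced classification: 5 fraudulent purchases out of 100 (hypothetical).
actual_labels = ["fraud"] * 5 + ["legit"] * 95
predicted_labels = ["legit"] * 100  # a model that never flags fraud
print(accuracy(actual_labels, predicted_labels))  # 0.95

fraud_caught = sum(a == p == "fraud" for a, p in zip(actual_labels, predicted_labels))
print(fraud_caught)  # 0
```

The useful regression model scores 0% while the useless classifier scores 95%, which is exactly the inversion described above.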

Mean Squared Error (MSE) for Regression Analysis

A much more suitable metric for regression analysis is Mean Squared Error (MSE), which averages the squared differences between the actual and predicted values.

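Consider Jim's model on two data points:

| House | Actual price ($) | Predicted price ($) |
|---|---|---|
| 1 | 500,900 | 501,000 |
| 2 | 449,800 | 450,000 |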

Let's use \(y_i\) to represent the true price of house \(i\). Remember that we use the ^ to denote estimates, so \(\hat{y}_i\) is the predicted price of house \(i\). The MSE for these predictions is calculated as:

$$\begin{aligned} MSE &= \frac{1}{2}\sum_{i=1}^{2} (y_i - \hat{y}_i)^2 \\ &= \frac{(500{,}900-501{,}000)^2 + (449{,}800-450{,}000)^2}{2} \\ &= \frac{(-100)^2 + (-200)^2}{2} \\ &= 25{,}000 \end{aligned}$$

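The Mean Squared Error of Jim's model is 25,000 squared dollars. Because MSE is expressed in squared units, it can be hard to interpret. To make interpretation easier, we can use the Mean Absolute Error (MAE) instead, which averages the absolute differences and is expressed in the same units as the target.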

$$\begin{aligned} MAE &= \frac{1}{2}\sum_{i=1}^{2} |y_i - \hat{y}_i| \\ &= \frac{|500{,}900-501{,}000| + |449{,}800-450{,}000|}{2} \\ &= \frac{100 + 200}{2} \\ &= 150 \end{aligned}$$

The Mean Absolute Error of Jim's model is 150 dollars.
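The two calculations above translate directly into code. This plain-Python sketch reproduces Jim's numbers; `mean_squared_error` and `mean_absolute_error` are illustrative helpers here, although scikit-learn offers functions with the same names in `sklearn.metrics`.

```python
def mean_squared_error(actual, predicted):
    """Average of squared differences; units are the target's units squared."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_error(actual, predicted):
    """Average of absolute differences; same units as the target."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Jim's two data points: true prices (y_i) and predicted prices (y_hat_i).
actual = [500_900, 449_800]
predicted = [501_000, 450_000]

print(mean_squared_error(actual, predicted))   # 25000.0
print(mean_absolute_error(actual, predicted))  # 150.0
```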

F1-score for Imbalanced Datasets

Let's introduce a few terms to help understand the performance of Mary's model. These terms are specific to binary classification (classification with two classes). We will designate fraudulent purchases as "positive" cases.

| | Predicted: fraudulent | Predicted: legitimate |
|---|---|---|
| Actual: fraudulent | True Positive (TP) | False Negative (FN) |
| Actual: legitimate | False Positive (FP) | True Negative (TN) |

An ideal model will maximize TP and TN while minimizing FP and FN. While a False Positive and a False Negative are both misclassifications, the costs of these mistakes are not necessarily equal.
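Counting the four outcomes from lists of actual and predicted labels is straightforward. In this sketch, `confusion_counts` is an illustrative helper, the labels are hypothetical, and `positive` names whichever class we designate as positive (fraudulent purchases in this article).

```python
def confusion_counts(actual, predicted, positive):
    """Return (TP, FN, FP, TN) for a binary problem with the given positive class."""
    tp = fn = fp = tn = 0
    for a, p in zip(actual, predicted):
        if a == positive and p == positive:
            tp += 1  # actual positive, predicted positive
        elif a == positive:
            fn += 1  # actual positive, predicted negative
        elif p == positive:
            fp += 1  # actual negative, predicted positive
        else:
            tn += 1  # actual negative, predicted negative
    return tp, fn, fp, tn


actual = ["fraud", "fraud", "legit", "legit", "legit"]
predicted = ["fraud", "legit", "fraud", "legit", "legit"]
print(confusion_counts(actual, predicted, positive="fraud"))  # (1, 1, 1, 2)
```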

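With these terms, we can calculate some metrics. Precision is the proportion of predicted positives that are actually positive, and Recall is the proportion of actual positives that the model correctly identifies:

$$precision = \frac{TP}{TP + FP}$$

$$recall = \frac{TP}{TP + FN}$$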

If we were more concerned with False Positives, we would want to maximize Precision. If we were more concerned with False Negatives, we would want to maximize Recall.

The F1-score is a common metric used for binary classification. It is the harmonic mean of Precision and Recall:

$$F_1 = \frac{2 \times precision \times recall}{precision + recall}$$

Let's go back to Mary's model. Its precision and recall are both 0, so the F1 formula evaluates to 0/0 and is undefined. By convention, we assign this edge case an F1-score of zero.

Precision, Recall and F1 fall into the range of 0 to 1. The closer to 1, the better the performance.
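A minimal sketch of these metrics computed from confusion-matrix counts. It applies the zero convention to F1 as described above and, as an assumption of this sketch, extends the same convention to precision or recall when their denominators are zero. `precision_recall_f1` is an illustrative helper and the counts in the example are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall and F1 from confusion-matrix counts.

    Undefined ratios (0/0) are treated as 0.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0  # F1 would be 0/0 here; assign 0 by convention
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts for a model that never catches a fraudulent purchase (TP = 0).
print(precision_recall_f1(tp=0, fp=3, fn=5))  # (0.0, 0.0, 0.0)
```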

Important: the F1-score is only a good performance indicator for imbalanced datasets if the positive class is the minority class, i.e. there are more negative cases than positive cases.

This is because Precision and Recall are both built around the number of true positives and ignore true negatives entirely. If the majority class is treated as "positive", its many true positives inflate the score. The good news is that we can always relabel the classes so that the minority class is treated as the "positive" class.

Mary creates a second model with slightly better performance. The table below captures model performance on a test set. This table is known as a confusion matrix.

| | Predicted: fraudulent | Predicted: legitimate |
|---|---|---|
| Actual: fraudulent | 10 | 12 |
| Actual: legitimate | 25 | 120 |

Using this confusion matrix, we can demonstrate the dangers of designating the majority class as the "positive" class.

Case 1: Taking fraudulent purchases as the "positive" class yields an F1-score of 35.1%.

\(precision = \frac{10}{10 + 25} = 0.286\), \(recall = \frac{10}{10 + 12} = 0.455\), \(F_1 = \frac{2 \times 0.286 \times 0.455}{0.286 + 0.455} = 0.351\)

Case 2: Taking legitimate purchases as the "positive" class yields an F1-score of 86.6%.

\(precision = \frac{120}{120 + 12} = 0.909\), \(recall = \frac{120}{120 + 25} = 0.828\), \(F_1 = \frac{2 \times 0.909 \times 0.828}{0.909 + 0.828} = 0.866\)
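Both cases can be reproduced from the confusion matrix by swapping which class is treated as positive. In this sketch the matrix is stored as a dictionary keyed by (actual, predicted) pairs, an illustrative layout, and `f1_for_positive_class` is a hypothetical helper; the counts are the ones from Mary's second model. It assumes the positive class has at least one true positive, so no division by zero occurs.

```python
# Mary's second model: counts keyed by (actual, predicted).
matrix = {
    ("fraudulent", "fraudulent"): 10,
    ("fraudulent", "legitimate"): 12,
    ("legitimate", "fraudulent"): 25,
    ("legitimate", "legitimate"): 120,
}


def f1_for_positive_class(matrix, positive):
    """F1-score when `positive` is designated as the positive class."""
    tp = matrix[(positive, positive)]
    fn = sum(n for (a, p), n in matrix.items() if a == positive and p != positive)
    fp = sum(n for (a, p), n in matrix.items() if a != positive and p == positive)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


for positive in ("fraudulent", "legitimate"):
    print(f"{positive}: F1 = {f1_for_positive_class(matrix, positive):.3f}")
# fraudulent: F1 = 0.351
# legitimate: F1 = 0.866
```

The swap leaves every count unchanged, yet it more than doubles the score, which is why the minority class should be the positive one.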

How exactly do you train a model?

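This step has been greatly simplified by the wealth of tooling available to us: the actual training process can be achieved in a few lines of code. The much harder parts are understanding the business problem, wrangling the data and integrating the solution with existing business systems.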

Let's assume we have a well-defined business problem and the data to solve it. The next step is usually feature engineering: the series of transformations applied to the raw input variables before the training phase.
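Feature engineering covers many kinds of transformation. As a hedged illustration, this sketch applies two common ones to hypothetical raw inputs: standardizing a numeric column to zero mean and unit variance, and one-hot encoding a categorical column. The `channel` column and its values are made up for the example.

```python
from statistics import mean, pstdev


def standardize(values):
    """Rescale numbers to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # a constant column carries no information
    return [(v - mu) / sigma for v in values]


def one_hot(values):
    """Turn a categorical column into 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


leads = [10, 20, 30, 40]                      # raw numeric input
channel = ["email", "web", "email", "phone"]  # raw categorical input (hypothetical)

scaled_leads = standardize(leads)
categories, channel_columns = one_hot(channel)
print(categories)       # ['email', 'phone', 'web']
print(channel_columns)  # [[1, 0, 0], [0, 0, 1], [1, 0, 0], [0, 1, 0]]
```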

After we have the inputs prepared, we test and evaluate candidate models to determine the most suitable one.
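A minimal sketch of comparing candidate models on held-out data. Two hypothetical candidates, a proportional model \(\hat{y} = \beta \times x\) and a baseline that always predicts the mean of the training targets, are fitted on the training set and scored by MSE on the test set; the one with the lower error is selected. All data and helper names are illustrative.

```python
def mse(actual, predicted):
    """Mean squared error between two equal-length sequences."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def fit_proportional(xs, ys):
    """Least-squares fit of y_hat = beta * x; returns a prediction function."""
    beta = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return lambda x: beta * x


def fit_mean_baseline(xs, ys):
    """Baseline that always predicts the average training target."""
    average = sum(ys) / len(ys)
    return lambda x: average  # ignores the input entirely


train_x, train_y = [10, 20, 30, 40], [2, 4, 6, 8]
test_x, test_y = [15, 35], [3.2, 6.8]

candidates = {"proportional": fit_proportional, "mean baseline": fit_mean_baseline}
scores = {}
for name, fit in candidates.items():
    model = fit(train_x, train_y)  # train on the training set only
    scores[name] = mse(test_y, [model(x) for x in test_x])  # evaluate on the test set

best = min(scores, key=scores.get)
print(scores, "->", best)
```

Scoring every candidate on the same test set, which none of them saw during training, keeps the comparison fair.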

The final model is then deployed and integrated as a part of a larger business system.